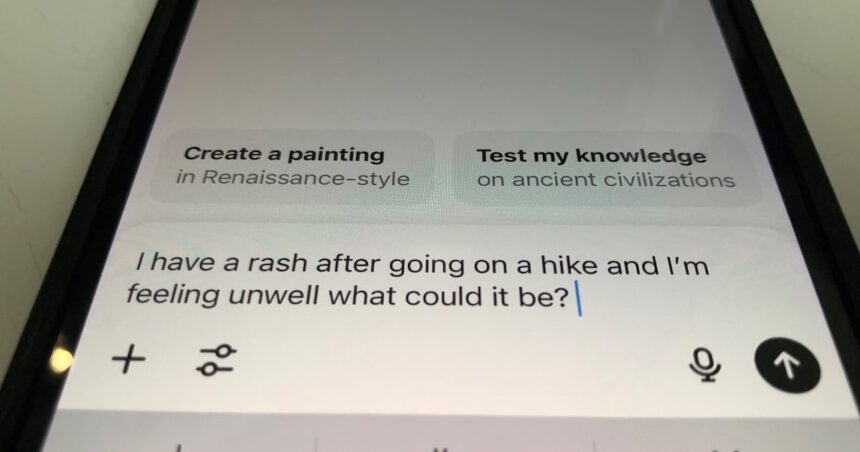

In an era when smartphones function as pocket doctors, Canadians are increasingly turning to artificial intelligence for medical advice, with potentially troubling consequences. A new study from the University of Toronto finds that ChatGPT and similar AI chatbots frequently produce inaccurate medical diagnoses, raising serious concerns about patient safety on this digital health frontier.

The research team, led by Dr. Amira Hassan, evaluated ChatGPT’s diagnostic capabilities against a panel of board-certified physicians using 50 common medical scenarios. The results were sobering: the AI tool correctly identified the primary diagnosis in only 63% of cases, compared to the human doctors’ 91% accuracy rate.

“What’s particularly concerning is not just the errors, but the confidence with which these AI systems deliver incorrect information,” Dr. Hassan told me during our interview at the university’s Health Sciences Complex. “ChatGPT presents its responses with an authoritative tone that could mislead patients into delaying proper medical care or pursuing inappropriate treatments.”

The study, published yesterday in the Canadian Medical Association Journal, highlights specific instances where ChatGPT's advice could have caused serious harm. In one scenario involving symptoms of a pulmonary embolism (a potentially life-threatening blood clot in the lungs), the AI suggested the symptoms were likely just anxiety and recommended rest rather than immediate medical attention.

Health Canada officials have taken notice of these findings. Deputy Minister Patricia Thompson confirmed to CO24 News that the agency is developing regulatory guidelines for medical AI applications. “We recognize the potential benefits of these technologies, but patient safety must remain paramount,” Thompson stated. “These tools simply cannot replace trained medical professionals.”

The research emerges amid a broader national conversation about healthcare access. With nearly 6.5 million Canadians lacking a family physician, according to Statistics Canada's latest data, many are seeking alternative sources of medical information. A separate survey conducted alongside the study found that 37% of Canadians have used AI tools for health concerns at least once in the past year.

Dr. Michael Chen, head of Digital Health Innovation at McGill University, who was not involved in the research, offered a more measured perspective. “These tools aren’t inherently dangerous if used appropriately,” he explained to CO24 Canada. “They can serve as an initial screening mechanism, but users need clear guidance on their limitations.”

The Ontario Medical Association has responded by launching a public awareness campaign emphasizing the importance of professional medical consultation. “We’re not dismissing technology’s role in healthcare,” said OMA President Dr. Samantha Lee. “But we need to ensure Canadians understand when and how to use these tools responsibly.”

The implications extend beyond patient safety to healthcare costs. Canadian healthcare systems, already strained by the pandemic, could face additional burdens from delayed or incorrect self-diagnoses. Analysis from the Canadian Institute for Health Information suggests that inappropriate self-care measures inspired by online sources cost the system an estimated $267 million annually.

As Canada navigates this evolving healthcare landscape, the study authors recommend implementing clear warning messages within AI platforms about their limitations for medical use. They also suggest developing Canadian-specific training data that reflects our unique healthcare context and population demographics.

The research raises a profound question for our increasingly digitized society: in our rush to embrace convenient technological solutions, are we placing unwarranted trust in systems that aren’t yet equipped to handle matters of life and death?