In a closely watched legal confrontation that could reshape the burgeoning artificial intelligence industry, Getty Images and Stability AI squared off this week in London’s High Court, marking a pivotal moment in the ongoing tension between traditional content creators and AI developers.

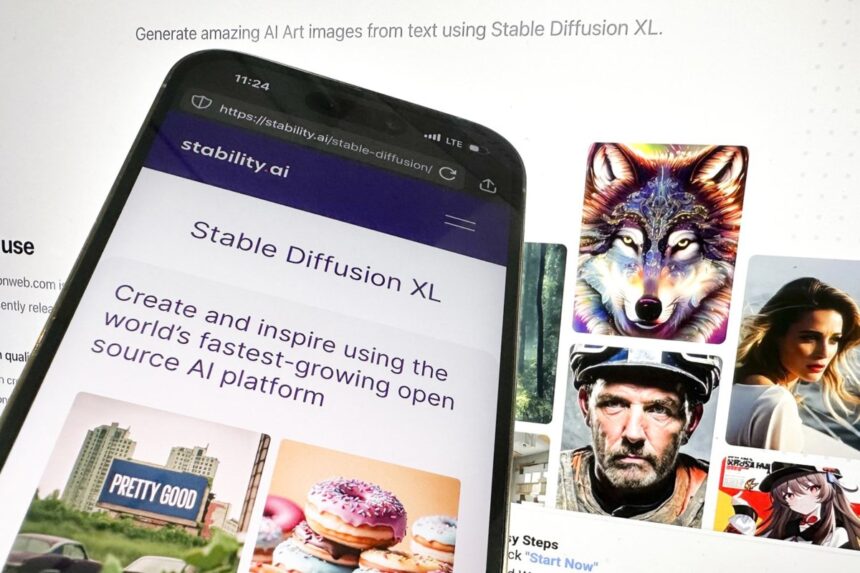

The dispute centers on Stability AI’s alleged use of millions of Getty’s copyrighted images to train its text-to-image generator Stable Diffusion without permission or compensation. Getty Images, one of the world’s foremost visual media companies, claims this unauthorized use constitutes a flagrant violation of intellectual property rights that undermines the creative economy.

“This case represents a fundamental principle: creative work has value and cannot simply be appropriated without consent,” said Getty’s legal representative during Tuesday’s preliminary hearing. “When AI companies harvest millions of copyrighted works to build commercial products, they must operate within established legal frameworks.”

The legal battle, which began in early 2023, has implications for the AI industry as a whole. As generative AI technologies increasingly permeate global markets, questions about copyright, fair use, and creative ownership have taken center stage in both boardrooms and courthouses worldwide.

Stability AI has maintained that its use of images falls under fair dealing provisions in UK law, arguing that the training process constitutes transformative use rather than direct reproduction. The company’s defense hinges on the technical nature of AI training, where images are processed as data rather than copied in their original form.

“Machine learning systems don’t store or reproduce the images they’re trained on,” explained Dr. Elena Mikhailova, an AI ethics researcher at the University of Toronto. “They extract patterns from vast datasets to generate new content. The question becomes whether this process constitutes a copyright infringement under existing laws that weren’t written with these technologies in mind.”

The legal proceedings have drawn significant attention from technology companies, creative professionals, and policy experts. Similar cases are unfolding in the United States, including separate litigation brought by Getty against Stability AI and a class action lawsuit filed by artists against several AI companies.

Mark Thompson, an intellectual property attorney and consultant to several Canadian media companies, told CO24: “This case could establish precedent for how copyright law applies to AI training data across multiple jurisdictions. Courts are wrestling with balancing innovation against protecting creators’ rights in a technological landscape that’s evolving faster than legislation.”

The trial arrives at a crucial moment for the AI industry, which has seen explosive growth but faces increasing scrutiny from regulators and rights holders. Companies have raised billions in venture capital to develop increasingly sophisticated generative models, often using vast datasets of human-created content to train their systems.

Industry analysts estimate the generative AI market could reach $110 billion by 2030, but legal uncertainties threaten to complicate this trajectory. Some AI developers have already begun seeking licensing agreements with content providers, while others argue that overly restrictive interpretations of copyright law could stifle technological progress.

As the British court deliberates on this landmark case, creators and technologists alike are left wondering: will existing copyright frameworks bend to accommodate new technologies, or will AI companies be forced to fundamentally rethink how they train their models on human-created works?